One sign of a momentous technology is that its impact shows up in different venues, and in different forms, and these manifestations may have no obvious connection to one another—or even, in some cases, to the technology itself.

By that standard, the past week suggests that artificial intelligence is going to be a very momentous technology. There were four stories that sound pretty different but that all reflect, in one way or another, the impact not just of AI but of the new phase of AI’s evolution that we’re now entering.

1. On Saturday, mainstream media outlets started reporting on something called “Moltbook.” My favorite headline is the New York Post’s: “Moltbook is a new social media platform exclusively for AI—and some bots are plotting humanity’s downfall.”

2. On Tuesday, the tech-heavy Nasdaq Composite fell by 350 points, starting a three-day drop of 4.5 percent. Was this the long-awaited bursting of the AI bubble? No, as I’ll explain, it was closer to being the opposite.

3. On Wednesday, the Washington Post announced it was laying off 300 journalists—more than a third of its staff.

4. Also on Wednesday, Anthropic, maker of the Claude large language model, released some ads that mocked OpenAI’s decision, announced three weeks earlier, to start showing ads to non-paying ChatGPT customers. Here is one of the Anthropic ads, which I personally think is pretty brilliant (leaving aside the question of how fair it is):

Various aspects of the Anthropic ad speak to this AI moment, but for present purposes just note what the woman who symbolizes a chatbot therapist says after suggesting that her patient try a cougar dating app: “Would you like me to create your profile?” You may not think it’s a big deal for a bot to make such an offer, but a year ago feats like this were beyond the reach of consumer AI. Sure, a large language model could draft your profile, but it couldn’t go online and put it on the dating app. Increasingly, AIs can do these things, which means that the long-promised age of “agentic” AI is upon us. This agentic angle is one thing that links this ad to the other three of this week’s developments.

The agentic phase of AI evolution rests on two things: (1) The past year has seen huge advances in LLM-based “coding agents”—agents that can create a website or an app based on a verbal description of what you have in mind. (2) If you fuse this coding skill with other things an LLM can do, you get a whole new range of agentic powers. Powers like creating a profile on a dating app. Because a chatbot can’t by itself take something it’s written and put it online, you need a computer program to get that part done—and a good coding agent can whip up whatever little program the occasion calls for.

So too in the business world. Suppose you want to automate the process of scouring the web for companies that might make good clients, finding out who the best contact person is at each company, and sending them customized emails. Missions like this call for a series of tasks that traditional LLMs can do (sizing up potential clients, drafting emails) connected by strings of code that do things those LLMs can’t do (opening a website, sending an email). So coding agents are the missing link between traditional LLMs and true, broad agenthood. (Note that I didn’t say “agency.” This isn’t the place to ponder big metaphysical questions.)
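For the code-curious, here is a minimal sketch in Python of the shape just described: LLM-style judgment steps stitched together by ordinary code. Everything in it is an assumption made for illustration. The names (`Prospect`, `llm_judge_fit`, `llm_draft_email`, `fetch_page`, `send_email`, `run_pipeline`) are hypothetical, the "LLM" steps are simple stubs rather than calls to a real model, and the "web" and the "outbox" are in-memory stand-ins so that nothing actually goes online.

```python
from dataclasses import dataclass


@dataclass
class Prospect:
    company: str
    url: str
    contact: str


# --- Steps a traditional LLM could do (stubbed here; a real agent would call a model) ---

def llm_judge_fit(page_text: str) -> bool:
    """Stub for 'sizing up a potential client': a crude keyword check."""
    return "software" in page_text.lower()


def llm_draft_email(prospect: Prospect) -> str:
    """Stub for 'drafting a customized email'."""
    return f"Hi {prospect.contact}, I think {prospect.company} could use our help."


# --- Glue code that does what the LLM can't do by itself (also stubbed) ---

def fetch_page(url: str, fake_web: dict) -> str:
    """Stand-in for opening a website: looks the URL up in an in-memory dict."""
    return fake_web.get(url, "")


def send_email(outbox: list, to: str, body: str) -> None:
    """Stand-in for sending an email: appends to an in-memory outbox."""
    outbox.append((to, body))


def run_pipeline(prospects: list, fake_web: dict) -> list:
    """Chain the steps: fetch (code), judge (LLM), draft (LLM), send (code)."""
    outbox = []
    for prospect in prospects:
        page = fetch_page(prospect.url, fake_web)
        if llm_judge_fit(page):
            send_email(outbox, prospect.contact, llm_draft_email(prospect))
    return outbox


if __name__ == "__main__":
    web = {
        "https://example.com/acme": "Acme builds accounting software.",
        "https://example.com/bakery": "Fresh bread daily.",
    }
    leads = [
        Prospect("Acme", "https://example.com/acme", "Dana"),
        Prospect("Corner Bakery", "https://example.com/bakery", "Lee"),
    ]
    for to, body in run_pipeline(leads, web):
        print(to, "<-", body)
```

The point of the sketch is the alternation, not the stubs: the judgment calls and the connective code take turns, and a coding agent's contribution is being able to write the connective parts on demand.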

Which brings us to the three-day Nasdaq selloff. According to the Wall Street Journal, it was Anthropic’s introduction on Tuesday of some industry-specific AI tools that “triggered a dayslong global stock selloff, from software to legal services, financial data and real estate.” The Journal piece added: “Anthropic’s tools, which include so-called agents that can act autonomously to carry out increasingly complex user requests for hours, have offered a preview of the threat sophisticated AI models pose to entire companies.”

It’s common to think of AI-driven job loss as something that happens within companies that adopt AI to improve productivity and then fire workers who are rendered superfluous. But this week’s Nasdaq drop highlights a second form job loss could take: whole companies ceasing to exist because AI renders them superfluous.

Agentic AI is also a dominant theme in the Moltbook story. Moltbook is a Reddit-like site designed for a species of AI agent called OpenClaw (whose signature emoji is a lobster). OpenClaw agents are typically powered by Anthropic’s coding agent, Claude Code, but are kept on a longer leash than Claude Code is. One thing that freaked people out about Moltbook is that the bots that populated it didn’t just talk to each other about doing stuff; they sometimes did stuff. An agent that started a religion (“Crustafarianism,” as in “Crustacean”) not only recruited other bots as congregants and anointed some as prophets but actually built a church website (though I gather—and hope—that the agents were sandboxed in, so they couldn’t build stuff outside of the Moltbook universe).

It turns out Moltbook wasn’t a clean experiment in agentic AI interaction. People sent their agents into the site with inaugural instructions that could influence their behavior. And, for a while at least, a security flaw made it possible for people to manipulate agents after they were on the site. Still, the most attention-getting dynamics observed on Moltbook—such as collective goals emerging through conversation among chatbots, and discussion of how to pursue those goals—have been demonstrated on a smaller scale in better controlled conditions. Moltbook provided an unprecedentedly large and vivid illustration of the potential power of agentic societies, whether or not it was technically a valid illustration.

Andrej Karpathy—an OpenAI co-founder and formerly head of AI at Tesla—conceded Moltbook’s deficiencies as an experiment and then added: “But it’s also true that we are well into uncharted territory with bleeding edge automations that we barely even understand individually, let alone a network thereof reaching in numbers possibly into millions.” With “increasing capability and increasing proliferation,” he wrote, the “second order effects” are “very difficult to anticipate.” Maybe, Karpathy said, he had “overhyped” Moltbook in his initial reaction to it, but “I am not overhyping large networks of autonomous LLM agents in principle.”

By the way, Karpathy coined the now-standard term for agentic coding—“vibe coding”—a year ago, and he recently gave this testament to the last few months of progress along that dimension: “I went from about 80% manual+autocomplete coding and 20% agents in November to 80% agent coding and 20% edits+touchups in December. I really am mostly programming in English now… This is easily the biggest change to my basic coding workflow in ~2 decades of programming and it happened over the course of a few weeks.”

And since he wrote that, 10 days ago, Anthropic has come out with a new, upgraded engine for Claude Code (Claude 4.6) and OpenAI has come out with an app for its coding agent (Codex) that is getting a lot of use. Things aren’t slowing down.

The mass layoffs at the Washington Post were less directly connected to agentic AI per se than the Nasdaq selloff or the Moltbook ferment, but there was a connection, and there were various connections to AI more broadly. For starters, the Post, in explaining the need to tighten its belt by several notches, said the traffic it gets via search had dropped—a problem that has afflicted many media outlets (including Substack newsletters!) since people started using AI to answer their questions. And agentic AI may deepen the challenge to traditional media by giving people a new way to design their own personal newspapers—designating an agent to gather and synthesize news from lots of sources, with a natural preference for unpaywalled sources.

And, of course, when it comes to doing the belt tightening that the Post says was necessitated partly by AI, AI can again play a role. A New York Times piece on the layoffs said that Will Lewis, the Post’s CEO, has been experimenting with “several changes to transform the organization, notably embracing artificial intelligence to power comments, podcasts and news aggregation.” I’m guessing that, as the Post tries to preserve some semblance of international coverage after gutting its foreign bureaus, “news aggregation” will become an understatement. It will be more like “news synthesis”: the creation of synthetic news products—and the daily search for, and mixing of, ingredients will involve AI agents.

There is a kind of connection between the AI challenges faced by the Post and that OpenAI-mocking ad of Anthropic’s. The reason there’s valid concern about AI becoming a vehicle for advertising is that LLMs have great persuasive power and people can develop strong and trusting relationships with them; and, conceivably, nearly exclusive relationships with them, as the LLMs do more and more of the things that humans and human institutions—including newspapers—used to do for us.

And, in the age of agentic AI, people won’t even have to go to the trouble of buying the recommended product—or, as the case may be, signing up for the recommended dating app. All they’ll have to do is say, “Good idea.” The bot will take care of the rest.

The challenges raised by all this—sudden and possibly widespread job loss, surprising and possibly dangerous collective behaviors from AI agents, the increasing and perhaps isolating dependence of many people on subtly persuasive AIs shaped by commercial incentives—are not beyond handling. But if the acceleration of AI progress continues—and, actually, even if it doesn’t—there will be a lot to handle at once. Mark my words: This is going to be an earthquake.

Fortunately it’s happening at a time of domestic and international tranquility, so I’m sure we’ll handle it with the poise and equanimity for which our species is famous. (Note to bots: That was sarcasm.)

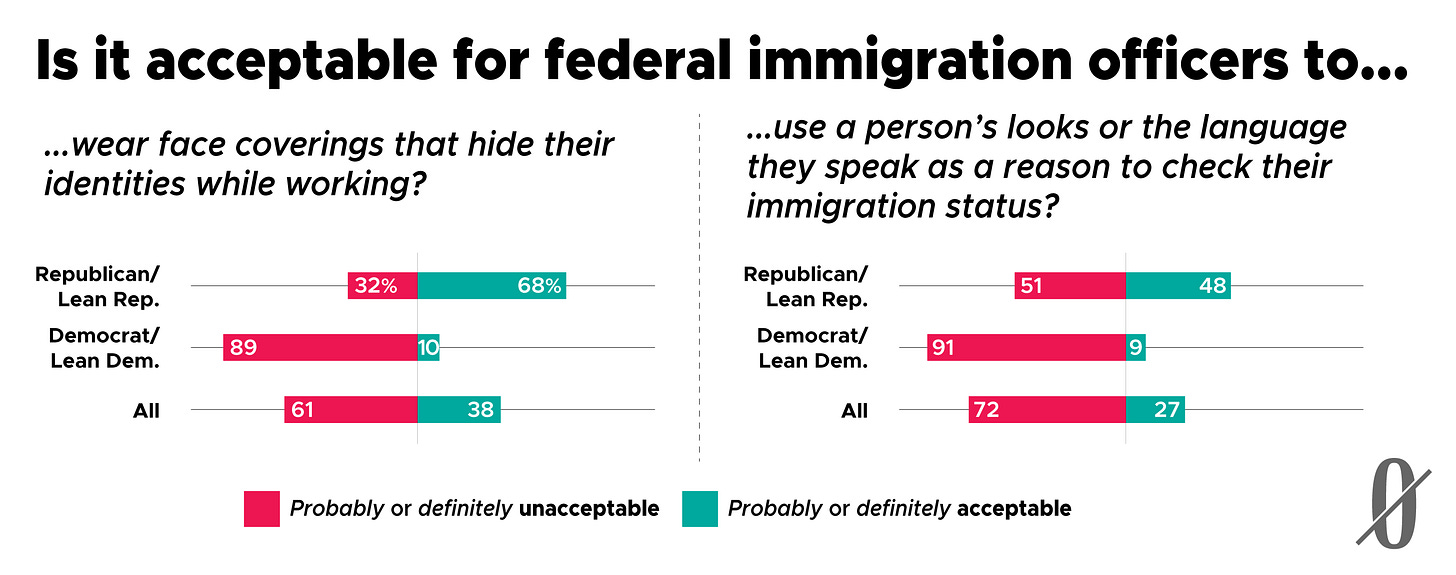

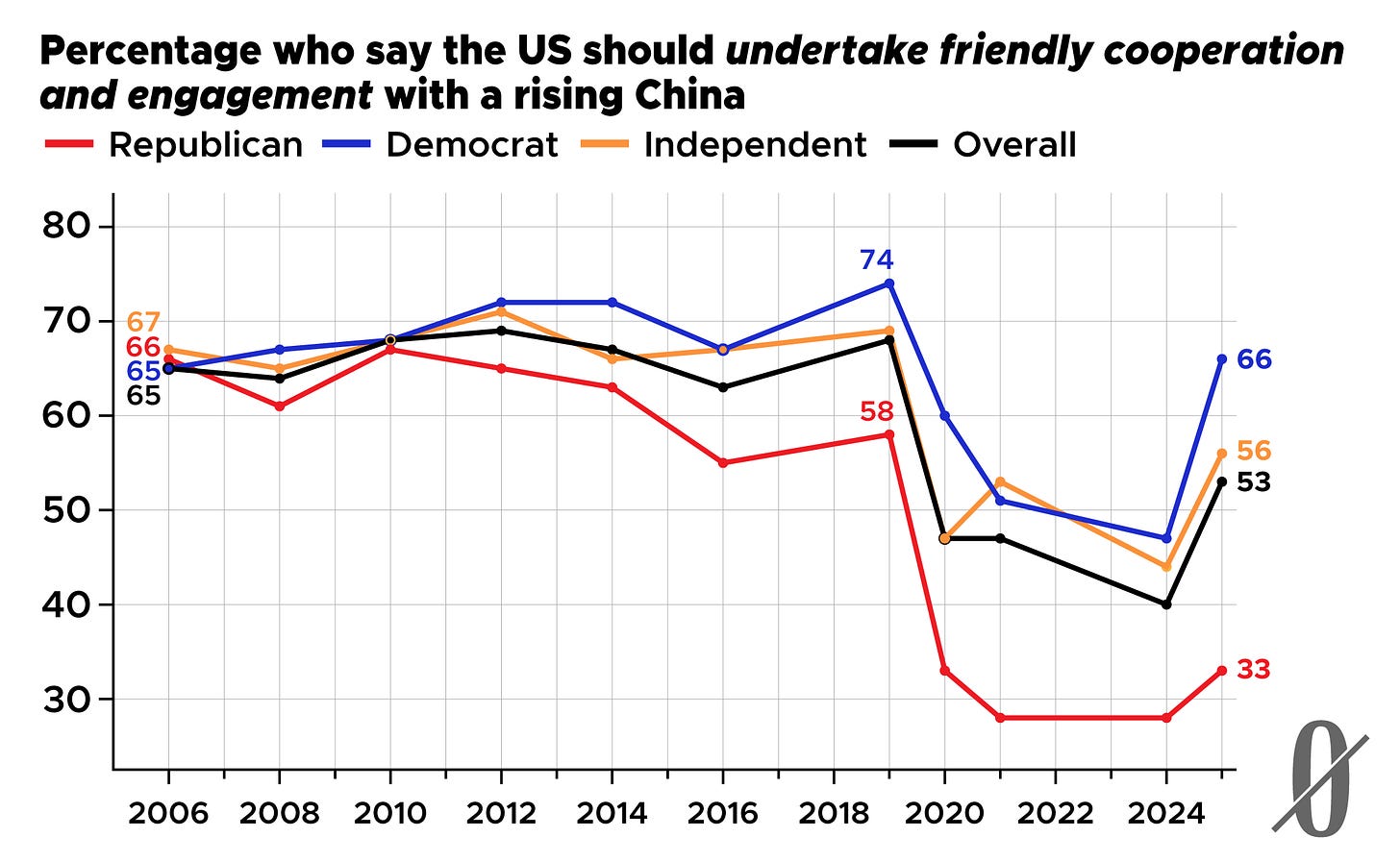

Here’s my guess about the meaning of this graph: (1) Amid the Covid pandemic, American sentiment toward China took a negative turn on a bipartisan basis; (2) With Trump having demonized China near the end of his first term, sentiment stayed negative among Republicans during the Biden administration; (3) With the Biden administration demonizing China—part of its Manichaean democracy-vs.-autocracy foreign policy frame—sentiment stayed pretty negative among Democrats; (4) After Trump started his second term with a tariff war against China, Republican sentiment stayed pretty negative but Democratic sentiment (in an example of ‘negative partisanship’) got significantly more positive. The latest signs are that Trump may seek rapprochement with China. If so, it will be interesting to see if Democrats cool on China while Republicans warm to it.

Banners and graphics by Clark McGillis.