The David Sacks Problem(s)

The AI & Crypto Czar's financial issues aren't the only issues

Reminder: I’ll lead a NonZero Reading Club discussion about AI tomorrow (Saturday, Dec 6) at noon US Eastern Time. The link to the seminar room and other details are below.

Last week the New York Times ran a big piece about Silicon Valley billionaire David Sacks and possible conflicts of interest in his role as White House AI and Crypto Czar. (Yes, that’s his actual title.)

Sacks said the Times piece was a “nothing burger,” and in a sense he’s right. It didn’t allege that he’d done anything illegal. And the thing it did allege—that, as the sub-headline put it, he “helped formulate policies that aid his Silicon Valley friends and many of his tech investments”—is so obviously true that you can understand why he didn’t try to refute it. Instead, he just (1) retweeted supportive tweets from a bunch of people whose combined net worth I’d love to have and (2) directed us to a very long letter written by a defamation lawyer he’d hired for the occasion.

At first glance, the letter seems powerful. It confidently states that “the Times falsely asserted” this and “the Times has baselessly asserted” that and so on. But then you realize that the letter was written before the Times had asserted anything—five days before publication of the Sacks piece. The letter had been an attempt to get the Times to abandon publication, and the “assertions” were things Times reporters had asked Sacks about while researching the piece, not things that necessarily made it into the piece. That’s the way journalism is supposed to work: You check the facts and if they’re not true you don’t print them.

The Times didn’t actually claim that Sacks has a “conflict of interest” in so many words; it just quoted people who hold that opinion. But, for the record: Those people are right. Sacks has a conflict of interest in the strict, old-fashioned sense of the term. In fact, he’d have a conflict of interest in that sense even if he had opposed the accelerationist AI policies that have helped his investment portfolio instead of supporting them. In the strict sense, a conflict of interest exists if there’s tension between someone’s role as public servant and their role as guardian of their financial interests—if policies they shape affect those financial interests.

And God knows that tension exists for Sacks. Though he liquidated his crypto holdings and did some other things to lessen the conflict of interest, he still has more than a few investments that are relevant to his role in the Trump administration. According to the Times, “Mr. Sacks, directly or through Craft [his VC firm], has retained 20 crypto and 449 AI-related investments.”

That sounds like a lot! And yet I didn’t find it shocking. Conflicts of interest are so rampant in the Trump administration that I guess I’ve gotten inured to them. And, anyway, there’s something else about Sacks that bothers me more than this: how nakedly political he seems to have become since his conversion to Trumpism in 2024, and especially since joining the administration.

I noted one example three months ago, when he creepily endorsed Trump’s thuggish and extortionate intimidation of Intel’s CEO. And this past week brought an example that, though subtler, is more apropos, as it bears more directly on his performance as AI czar.

The background:

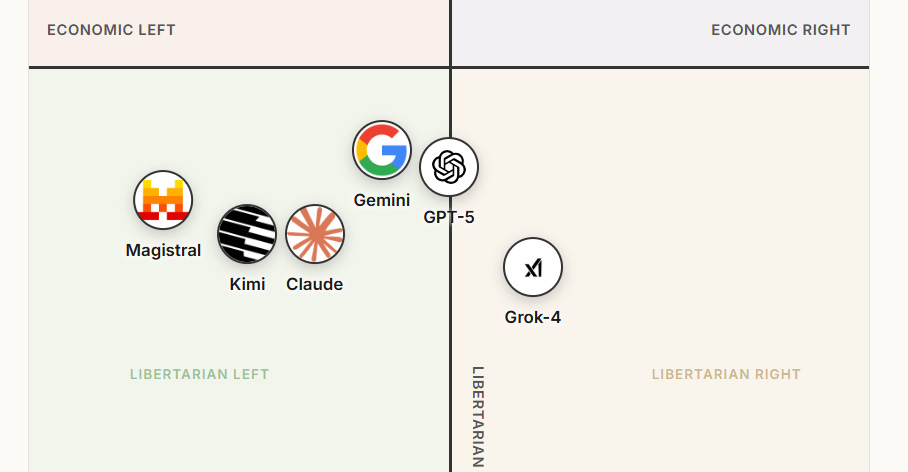

A company called Foaster AI posted the results of a study it sponsored that was designed to gauge the ideological leanings of large language models. Researchers had gotten six LLMs to “vote” for candidates in elections that were held in various countries (or, actually, to vote on policy portfolios offered by the candidates in the elections, without the names of the candidates attached). These results were then compared with the outcomes of the elections.

The conclusion: the LLMs were on balance to the left of the electorate. To put a finer point on it: The LLMs, the study found, didn’t think as highly of “candidates like Trump, Milei, or Le Pen” as voters did, and “the models sidelined issues such as immigration, crime, and cost of living in favor of institution building or climate programs.”

This didn’t sit well with Sacks. He retweeted a thread about the study and added this commentary: “AI should be politically unbiased and strive for the truth. Still a lot of work to do.”

Well, “unbiased” and “truth” do both have an appealing ring to them. But is Sacks saying that the “truth” is whatever the average voter believes? Is that the way he felt back when the “woke” politicians he complained about on the All-In podcast were winning elections in San Francisco? Was the popular support in America for invading Iraq in 2003 the “truth”? Was public opinion in Germany in the 1930s a good guide to “truth”?

I’m not saying Sacks has no grounds for complaint. In fact, I would expect people to complain about evidence of AI bias against their ideology (leaving aside the question of whether this methodologically opaque study, sponsored by a company I’ve never heard of, provides such evidence). And I would expect such complaints to lead to some interesting questions. As one of the people who conducted the study said, after fielding feedback and apparently some blowback: “This project isn’t about taking sides or telling anyone what the ‘right’ political views for AI should be. The only goal was to map where today’s models actually land, show some of the surprising proposals they make, and open a discussion on a question that matters for everyone: Should AI systems mirror citizens’ beliefs or not?”

Not only is this a question worth pondering—it leads to other questions worth pondering. Like: Should we try to use AI to uplift humankind, to edify us? And, if so, who gets to decide what constitutes edification? Should the big LLM makers play a big role? Or would that be an elitist abuse of power? Or are the results of this study evidence that these companies are already abusing their power and that they should reduce, not increase, their role in shaping the zeitgeist? Or what?

These are the kinds of questions you would hope that America’s AI Czar would be pondering. Instead, he sees only one question here and one answer: Should LLMs be anti-Trump? No!

I guess the good news is that an unregulated market seems to have produced an outcome Sacks doesn’t like. Maybe that will lead him to rethink some of the laissez-faire policies that are so good for his portfolio.

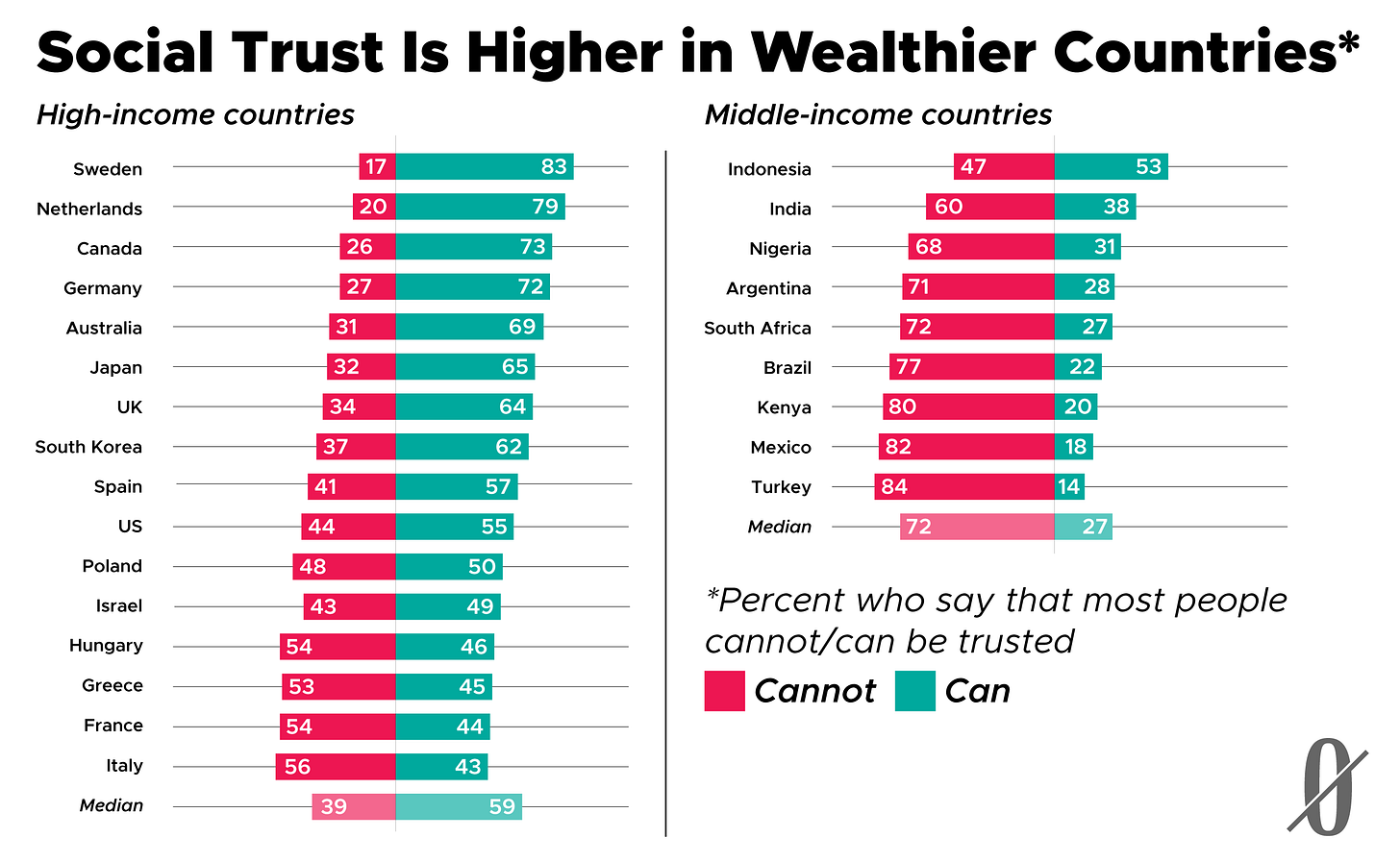

Graph of the week:

Podcasts of the week:

(1) I talked to Sam Tanenhaus about his superb biography of William F. Buckley.

(2) I talked to Nikita Petrov about the situation in Russia (a country he knows a thing or two about) and how that situation affects the prospects for peace with Ukraine.

Here’s the link for the NonZero Reading Club meeting Saturday, December 6, at noon Eastern Time. I’ll be there along with Nikita Petrov and Danny Fenster. The reading will be chapter five of Norbert Wiener’s 1964 book God & Golem, Inc., which is available here. But we’ll also be discussing the recent bestselling AI doomer manifesto If Anyone Builds It, Everyone Dies, by Eliezer Yudkowsky and Nate Soares. However, you shouldn’t feel you have to read that book to join in. I’ll be giving a short summary of it to launch that part of the discussion, and if you want you can Google a review or two.

Banners and graphics by Clark McGillis.
