The GPT-5 Era Is Here!

Plus: Trump’s new tariff fail, Israel’s new escalation, the roots of AI evil, eco-unfriendliness, and more!

This week OpenAI released GPT-5, the very-long-awaited successor to GPT-4, which came out more than two years ago. There have been other OpenAI models that arguably deserved the title “successor”; there’s 4.5, not to mention models called o1, o3, and 4o (names that, when rendered in fonts whose lower-case o’s resemble zeroes, become even more confusing than they otherwise would be). But GPT-5 integrates the distinctive powers of the different OpenAI models under a unified user interface and brings significant new advances of its own. The overall effect isn’t enough to warrant serious discussion of whether the breathlessly awaited threshold of “artificial general intelligence” has been reached. But it’s enough to sustain confidence that the trajectory of AI progress will continue: More and more AI power, in more and more useful forms, will be available to more and more people at lower and lower prices, with growing social, economic, political, and geopolitical impact. So, in acknowledgment of this moment, we begin this week’s Earthling with a few items that are about either GPT-5 itself or issues raised by ever-more-powerful AIs.

Ethan Mollick, a Wharton professor who has developed a reputation as an AI connoisseur and a straight shooter, gives GPT-5 a positive review. He’s particularly impressed by two of its skills: (1) “vibe coding”—creating software via natural language prompts (“Make me an app that…” or “Make me a video game that…”) with little corrective guidance needed; (2) pro-activeness: GPT-5 will “suggest great next steps” and in other ways lighten your load, he writes. “It is impressive, and a little unnerving, to have the AI go so far on its own. To be clear, humans are still very much in the loop, and need to be. You are asked to make decisions and choices all the time by GPT-5, and these systems still make errors and generate hallucinations.” But progress will march on. “The bigger question,” Mollick writes, “is whether we will want to be in the loop.”

Various studies have found that large language models, in pursuing their assigned goals, may engage in tactically useful deception or other misbehavior. In Science, AI researcher Melanie Mitchell argues that, though it’s tempting to explain such conduct by invoking “humanlike motives,” these LLMs may just be role-playing scenarios found in their training data—or, in some cases, exhibiting side-effects from the phase of training known as “reinforcement learning from human feedback.” Still, bad behavior is bad behavior. Mitchell writes, “Some researchers, including me, believe that the risks posed by these problems are dangerous enough that, in the words of a recent paper, ‘fully autonomous AI agents should not be developed.’”

Researchers at Anthropic say they have new ideas about how to make large language models less inclined toward hallucination—and, for that matter, toward evil. First, they isolate “persona vectors” in the model’s neural network—patterns of neuronal activation that correspond to “character traits,” such as a tendency toward sycophancy, hallucination, or bad behavior in general. Then, they expose the model to clusters of data and watch to see if the vector for an undesirable tendency is activated. If so, they may exclude that dataset from the training of other models. A very rough analogy: You put people in an MRI machine, show them a bunch of pictures, and, if a picture activates a part of the brain associated with fantasizing about mass murder, you decide not to include that picture in elementary school curricula.
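For readers who think in code, here is a toy Python sketch of the screening step described above. It is an illustration under loud assumptions, not Anthropic's implementation: it treats a persona vector as a single direction in activation space, scores each dataset by how strongly its activations point along that direction on average, and flags datasets that score above a cutoff. The function names, the dataset names, the numbers, and the threshold are all hypothetical.

```python
from math import sqrt


def dot(u, v):
    """Dot product of two equal-length lists of floats."""
    return sum(a * b for a, b in zip(u, v))


def projection(activation, persona_vector):
    """Scalar projection of one activation onto the persona direction."""
    norm = sqrt(dot(persona_vector, persona_vector))
    return dot(activation, persona_vector) / norm


def flag_datasets(activations_by_dataset, persona_vector, threshold):
    """Return names of datasets whose mean projection exceeds the threshold.

    activations_by_dataset maps a dataset name to a list of activation
    vectors recorded while the model read samples from that dataset.
    """
    flagged = []
    for name, activations in activations_by_dataset.items():
        scores = [projection(a, persona_vector) for a in activations]
        mean_score = sum(scores) / len(scores)
        if mean_score > threshold:
            flagged.append(name)
    return flagged


# Made-up data: a 3-dimensional "sycophancy" direction and two tiny datasets.
persona_vector = [1.0, 0.0, 0.0]
activations_by_dataset = {
    "recipes": [[0.1, 0.9, 0.2], [0.0, 0.5, 0.7]],
    "flattery_forum": [[0.9, 0.1, 0.0], [0.8, 0.3, 0.1]],
}

# Hypothetical cutoff; a real one would have to be chosen empirically.
flagged = flag_datasets(activations_by_dataset, persona_vector, threshold=0.5)
print(flagged)
```

In this toy setup only the "flattery_forum" data lights up the hypothetical vector, so it is the one that would be held out of training. Real models have thousands of activation dimensions, and deciding where to set the cutoff is its own problem.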

This week on the NonZero Podcast I had what I considered a productively contentious conversation with Jordan Schneider, host of the influential podcast China Talk (known as “China Hawk” to some of its dovish detractors). Jordan, by the end of the conversation, clearly didn’t agree about the “productively” part. If you have time to check out the conversation—either via the NonZero podcast feed or the NonZero YouTube channel—feel free to let me know in the comments section whether you agree with him that I was pointlessly confrontational. (Doesn’t sound like me!)

--RW

President Trump has lately been using tariff threats—which he originally issued in the name of US economic security—to pursue other goals as well, and so far he’s having little success. His demands that China and India quit buying Russian oil or else face steep tariffs have been rejected over the past 10 days (as “coercion and pressuring” in Beijing, and as “unjustified and unreasonable” in Delhi). And the 50 percent tariff recently levied on Brazil—punishment for the government’s prosecution of former President Jair Bolsonaro, a Trump ally—hasn’t helped Bolsonaro and, judging by a statement that Beijing issued this week, may have strengthened ties between Brazil and China.

China’s “ambiguous stance” toward Russia’s invasion of Ukraine stems from disagreement among Chinese elites, writes Da Wei of Tsinghua University in Foreign Affairs. Some elites lament Russia’s violation of Ukrainian sovereignty and draw comparisons with past invasions of Chinese territory, while others view the 2022 invasion as an understandable response to the kind of Western “encirclement” that may threaten China, too. But, Da writes, both sides agree on one thing: China’s policy on the war is one of neutrality (that is, of continued economic engagement with both combatant nations and their allies) and is being mischaracterized in the West as support for Russia over Ukraine.

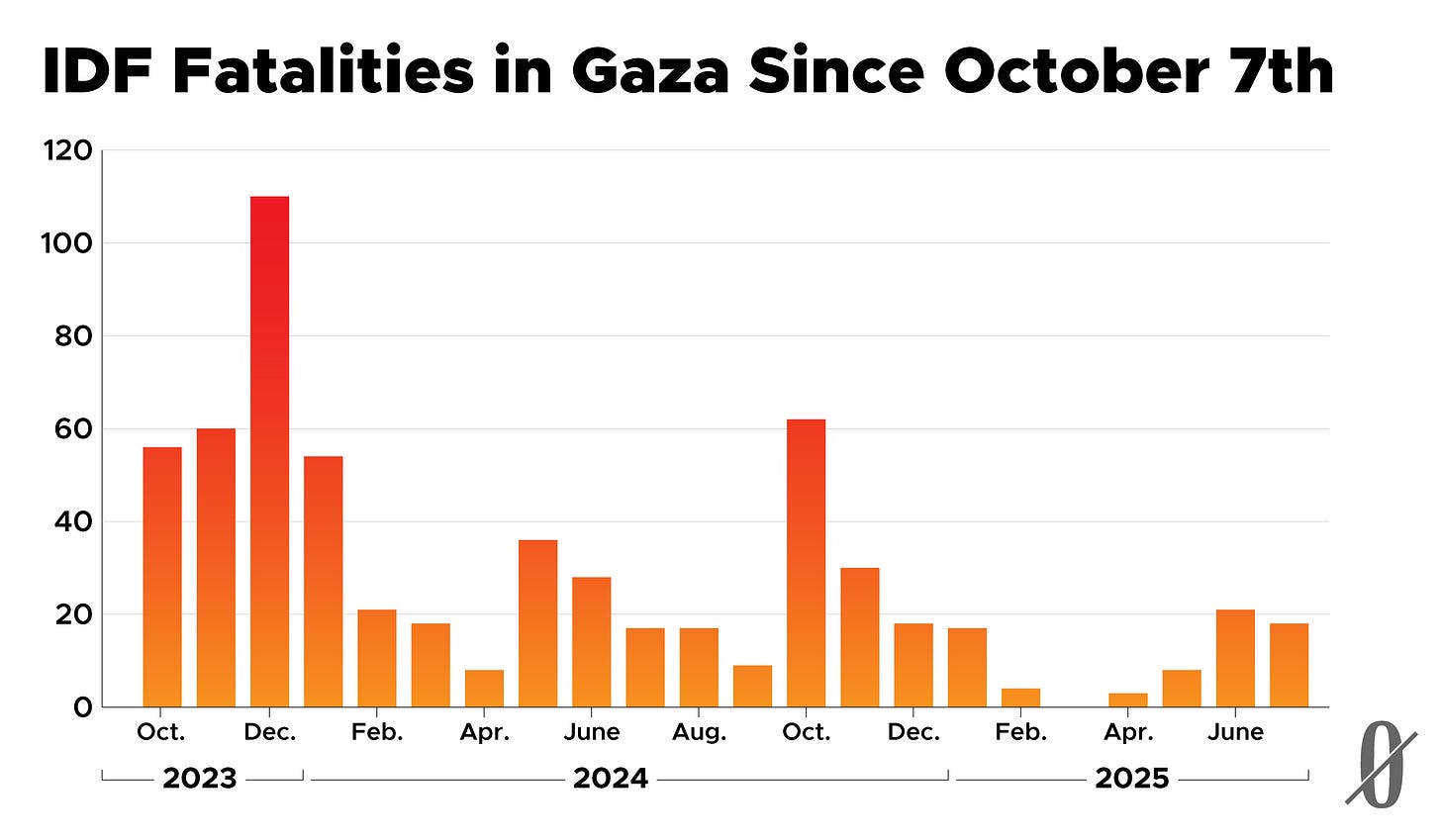

Israel’s Security Cabinet voted to seize and occupy Gaza City, apparently the first step in a plan to, as Prime Minister Benjamin Netanyahu put it on Thursday, “take control of the whole of Gaza.” Some 600 former Israeli security officials signed a letter earlier in the week calling on Trump to pressure Netanyahu to end the war, citing “our professional judgement that Hamas no longer poses a strategic threat to Israel.” Netanyahu said that, though his goal is to take control of Gaza, Israel doesn’t “want to govern it” but rather hopes to someday “hand it over to Arab forces.” So far no Arab forces have volunteered for the job.

In response to Israel’s decision to expand its occupation of Gaza, German Chancellor Friedrich Merz announced that “the German government will not authorize any exports of military equipment that could be used in the Gaza Strip until further notice.” The policy change has only minor military implications but major symbolic ones, since, as the New York Times observes, Germany since World War Two has “made the survival of Israel one of its own basic principles of state.” Israeli Prime Minister Benjamin Netanyahu accused Germany of “rewarding Hamas terrorism.”

For the sixth and possibly final time, world leaders are meeting to set terms of the planet’s first plastics pollution treaty. Previous efforts stalled when oil-producing nations resisted constraints on plastics production favored by most other nations. The danger plastic compounds pose to human health is “hotly contested,” but banning the most dubious ones and requiring greater industry transparency are the minimum reasonable steps, argues journalist Oliver Franklin-Wallis in the New York Times.

Trump’s Office of Management and Budget asked the Environmental Protection Agency to terminate nearly $7 billion in grants meant to put solar panels on the homes of low- and moderate-income families, CBS reports. The “Solar for All” program, a legacy of the Biden administration, is part of $27 billion in incentives for domestic green energy development that critics on the right have dismissed as a “slush fund for the EPA and favored nonprofits.”

People who consult ChatGPT about the likely future price of a stock are less accurate in their predictions, yet more confident of them, than people who consult other people instead—at least, if the findings of a study published in Harvard Business Review are generalizable. The findings may well not be. For one thing, though the study was controlled and randomized, it dealt only with one stock—Nvidia—over a one-month period. Still, the authors are probably right when they warn against trusting sources that, like ChatGPT, “sound authoritative”—and probably right when they add that “this also applies to our very own study.”

A “Virtual Lab” of AI research agents, each with expertise in a different domain, discovered several new antibody fragments that could help neutralize current variants of the Covid virus, a paper in Nature reports. Some important fine print: a human researcher provided “high-level feedback” to the agents. Still, the authors say, the AI agents, which had specialties ranging from immunology to computational biology to machine learning, were “able to conduct extensive discussions with relatively minimal input from the human researcher, and the individual identities of the agents contributed to a comprehensive, interdisciplinary discussion.”

Graphics, banners and images by Clark McGillis.

You were confrontational enough that I got significantly more clarity on the issues. So no! Not pointless.

The interview with Jordan was excellent, Bob!

At some point, will Nonzero reckon with what many AI skeptics are saying? GPT-5 has been a bit of a bust...

This DeBoer article is a good summary. The Rooses of the world are just handwaving way too much at this point. https://substack.com/@freddiedeboer/note/c-144755235?utm_source=notes-share-action&r=2cu6v